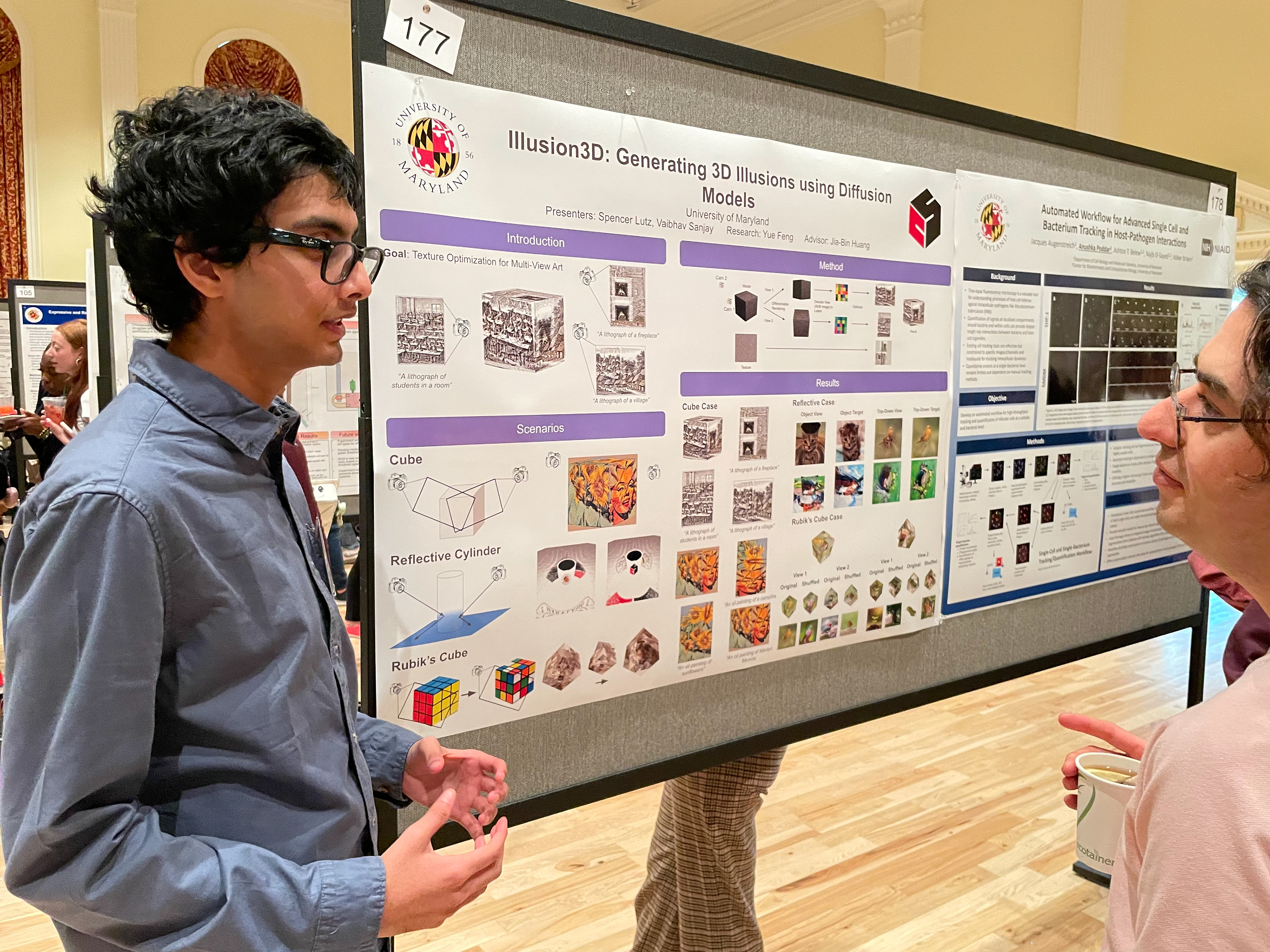

Previous work has shown that diffusion models guided by a pair of prompts can generate 2D illusions, such as an image that looks like a garden when viewed normally but like a bird when rotated 90 degrees. We aimed to extend this idea to 3D. Given several viewing angles of an object, each paired with a text prompt, we optimized the object's texture to produce an “illusion”: viewing the object from each angle reveals a completely different picture. We represented the object's color as a multi-resolution texture and alternated between the views. At each step we rendered the object from the current view with differentiable rendering and used Stable Diffusion to update the texture toward that view's prompt. Additionally, we applied a camera shift, perturbing every view's camera with slight Gaussian noise, to remove artifacts and prevent duplicated patterns.
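One way to picture the multi-resolution texture is as a stack of color grids at increasing resolutions whose upsampled sum forms the final texture, so coarse levels capture large color regions and fine levels add detail. The sketch below is illustrative only: it assumes resolutions that double per level, nearest-neighbour upsampling, and a sigmoid to keep colors in [0, 1]. The helpers `make_levels` and `compose_texture` are hypothetical names, and NumPy arrays stand in for what would be trainable, differentiable tensors in the actual optimizer.

```python
import numpy as np


def make_levels(base_res, num_levels, channels=3, seed=0):
    """Hypothetical helper: one RGB grid per level, resolution doubling each level."""
    rng = np.random.default_rng(seed)
    return [
        rng.normal(0.0, 0.01, size=(base_res * 2**i, base_res * 2**i, channels))
        for i in range(num_levels)
    ]


def compose_texture(levels):
    """Upsample every level to the finest resolution and sum them.

    In a real optimizer each level would be a trainable tensor, so a gradient
    on the composed texture reaches every resolution at once.
    """
    final_res = levels[-1].shape[0]
    total = np.zeros_like(levels[-1])
    for level in levels:
        factor = final_res // level.shape[0]
        # Repeating along both spatial axes is nearest-neighbour upsampling.
        total += np.repeat(np.repeat(level, factor, axis=0), factor, axis=1)
    # Sigmoid squashes the summed values into a valid [0, 1] color range.
    return 1.0 / (1.0 + np.exp(-total))


texture = compose_texture(make_levels(base_res=8, num_levels=4))
```

With four levels starting at 8×8, the composed texture is 64×64×3, and because the levels start near zero the initial texture is close to uniform gray.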
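The alternation between views, combined with the camera shift, can be sketched as a round-robin over (view, prompt) pairs in which every camera pose receives fresh Gaussian noise before it is rendered. This is a minimal sketch under stated assumptions: the `View` fields (spherical angles in degrees plus a distance), the noise scales `sigma_deg` and `sigma_dist`, and the function names are hypothetical, and the rendering and texture-update steps are left out.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class View:
    """Hypothetical camera description paired with its text prompt."""
    azimuth: float    # degrees
    elevation: float  # degrees
    distance: float   # distance from the object
    prompt: str


def jitter(view, sigma_deg, sigma_dist, rng):
    """Return a copy of `view` whose camera pose has small Gaussian noise added."""
    return View(
        azimuth=view.azimuth + rng.gauss(0.0, sigma_deg),
        elevation=view.elevation + rng.gauss(0.0, sigma_deg),
        distance=view.distance + rng.gauss(0.0, sigma_dist),
        prompt=view.prompt,
    )


def view_schedule(views, steps, sigma_deg=2.0, sigma_dist=0.05, seed=0):
    """Cycle through the views in order, shifting the camera at every step."""
    rng = random.Random(seed)
    cycle = itertools.cycle(views)
    for _ in range(steps):
        yield jitter(next(cycle), sigma_deg, sigma_dist, rng)


views = [View(0.0, 0.0, 3.0, "a garden"), View(90.0, 0.0, 3.0, "a bird")]
for v in view_schedule(views, steps=4):
    # Here one would render the texture from pose v and update it toward v.prompt.
    print(v.prompt, round(v.azimuth, 1))
```

Because the noise is redrawn at every step, no two renders of the same view use exactly the same camera, which is one plausible way a shift like this discourages the texture from overfitting to a single fixed pose.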
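The paragraph does not specify exactly how Stable Diffusion turns a render into a texture update. A common choice for this kind of render-and-optimize loop is Score Distillation Sampling (SDS), introduced with DreamFusion; assuming that loss, with texture parameters $\theta$, camera $c$, prompt $y$ paired with that camera, latent $z = \mathcal{E}(g(\theta, c))$ of the differentiable render $g$, noise $\epsilon \sim \mathcal{N}(0, I)$, timestep weight $w(t)$, and the diffusion model's noise prediction $\hat{\epsilon}_\phi$, the gradient would be:

$$
\nabla_\theta \mathcal{L}_{\text{SDS}} = \mathbb{E}_{t,\epsilon}\!\left[ w(t)\,\big(\hat{\epsilon}_\phi(z_t;\, y,\, t) - \epsilon\big)\, \frac{\partial z}{\partial \theta} \right],
\qquad
z_t = \sqrt{\bar{\alpha}_t}\, z + \sqrt{1 - \bar{\alpha}_t}\,\epsilon
$$

Only the render and encoder are differentiated; the diffusion model's own Jacobian is skipped, which is what makes the update cheap enough to repeat for every view.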

I created the 3D assets for this work and modified the differentiable rendering methods to support reflective surfaces and objects with rotating parts (such as a Rubik’s cube). I also implemented the multi-resolution texture that represents the object's color as differentiable tensors. Our “camera-shift” method achieved the highest CLIP score among the methods we compared, and in our user study 79% of participants chose it as the method that produced the best illusions. This work led to a conference poster at the UMD Undergraduate Research Day and a submission to SIGGRAPH.
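A CLIP score measures how well an image matches a text prompt through the cosine similarity of their CLIP embeddings. The text does not say which variant was used; the sketch below assumes the common `100 * max(cos, 0)` convention (the one documented for TorchMetrics' CLIPScore) and embeddings already computed by a CLIP model. `illusion_score`, which averages the score over one render per view, is a hypothetical aggregate for illustration.

```python
import numpy as np


def clip_score(image_emb, text_emb, scale=100.0):
    """scale * max(cosine similarity, 0) between precomputed CLIP embeddings."""
    image_emb = np.asarray(image_emb, dtype=float)
    text_emb = np.asarray(text_emb, dtype=float)
    cos = image_emb @ text_emb / (np.linalg.norm(image_emb) * np.linalg.norm(text_emb))
    # Negative similarities are clipped to zero, as in the 100 * max(cos, 0) convention.
    return scale * max(float(cos), 0.0)


def illusion_score(pairs, scale=100.0):
    """Hypothetical aggregate: mean CLIP score over (render, prompt) embedding pairs, one per view."""
    return sum(clip_score(img, txt, scale) for img, txt in pairs) / len(pairs)
```

Averaging over views rewards a texture only when every viewing angle matches its own prompt, which is the property an illusion needs.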